Hey, did you know you can remove sections of Google, Bing, and other websites you don’t like on desktop with a simple toggle switch? We built Ultimasaurus the Chrome extension that puts a ton of customizations and tools in your hands for free to help boost your productivity. Don’t like AI Overviews? Toggle them off, want to try a search with them? Toggle them back on and refresh the page easily and seamlessly. Tons of other features like “Focus Mode” where all social media sites are turned off, remove nagging YouTube popups, take screenshots, and eliminate stories on Facebook: Get it on the Chrome Store

Most publishers and website owners are not aware that there are ways to keep Google from effectively stealing your content for their AI systems and giving that content to users without your consent or sending you traffic. However, all of the options available could cause major problems for your website and most websites who are aware of these methods are not implementing them out of fear of the negative consequences that might come with them.

Method #1 – Google Extended

Google introduced a new command for Robots.txt called “Google Extended” in 2023. A poorly name, poorly documented, and confusing command; when Google launched this they said it would apply to Bard and Vertex APIs and other future AI systems but the documentation has not been properly updated to assure users this works in Gemini, AI Overviews, and AI Mode as well as other parts of Google (there is a page on Google’s documentation that clarifies this but it is hard to find).

Google also failed to mention that using the Google-Extended command doesn’t pull your old content out of their training set or keep your competitors from easily stealing your unique content.

This isn’t a scalpel either, it’s a hatchet as it is at the robots.txt level and not the page or section of a page level. While Robots.txt could be used this way, in general it has been used to manage sections of sites based on folder structure for over a decade, not at a page by page level. which means most implementations of this will remove their whole site from AI training or large parts of it.

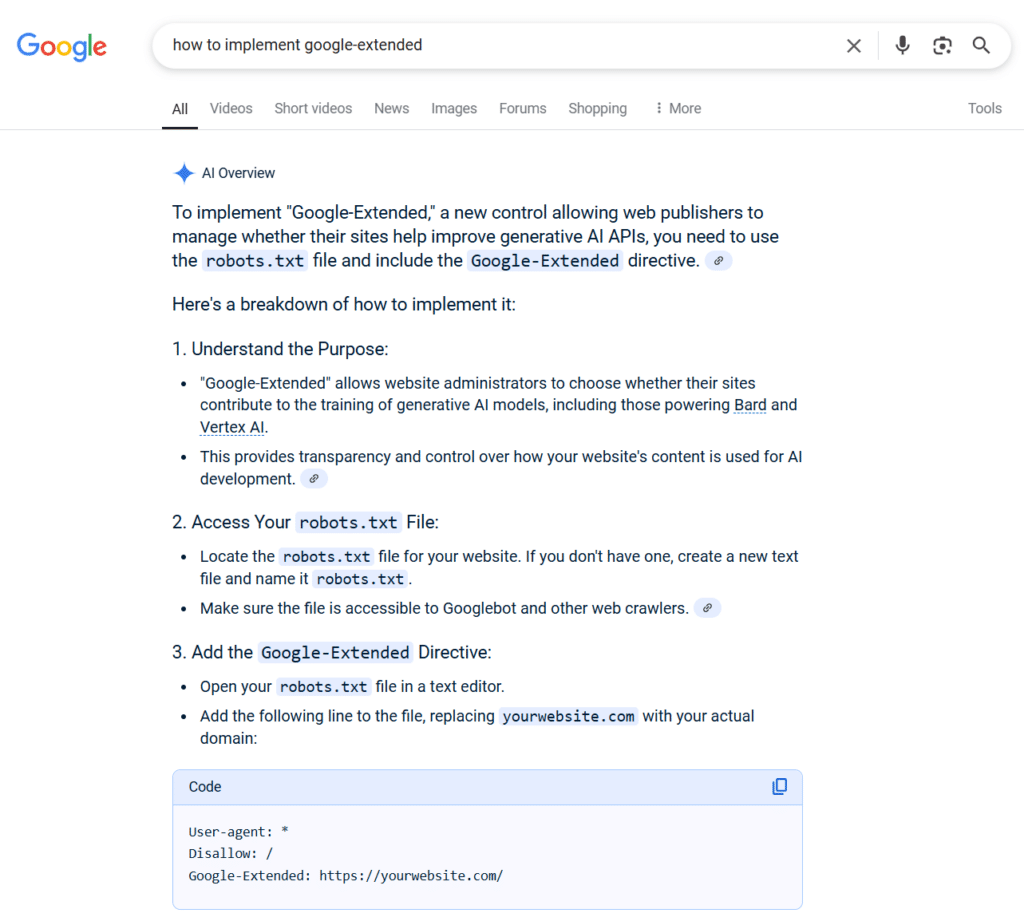

Worse yet, if you ask Google’s AI Overviews about this by typing a long-form query into Google you’ll also get a response that could devastate your website if you’re not careful. Google’s AI Overview recommends you disallow your entire site from Google which would remove 100% of your site from SERPs over the next few days.

Finally, Google’s documentation started poor and hasn’t been updated well since they launched it. The original blog post for example doesn’t link to a page that mentions Google-Extended at all even though the anchor text is “Google-Extended” and instead links to a general crawl overview, the words in the blog post mention Bard and Vertex not Gemini, and the internal search on Google’s documentation site tries to keep users from finding data on Google-Extended by saying there are no matching resources even though there are. It almost feels like they either want you to harm yourself really bad or fail to find the information you need to properly implement this.

To use this tag simply add this line to your website’s Robots.txt file under the first user-agent mention and/or under a user-agent mention specifically for Google.

Google-Extended: https://www.yoursitehere.com/Or maybe this command (Google gives both as examples) – We recommend using this option since it looks more accurate in terms of Robots.txt

User-agent: Google-Extended

Disallow: / Pros:

- Your content can no longer be used to train Google’s AI systems.

- You can block content based on folders / sections of your site.

Cons:

- This might remove your links from AI Overviews (Google says it won’t and that AI Overviews are subject to Search Preview Controls)

- This might remove your data from any AI parts of Google’s ranking systems (no details from Google here)

- This might remove your local business from being mentioned in AI Overviews (Google says it won’t and that AI Overviews are subject to Search Preview Controls)

- This does not remove your content from Google’s training that has already been used

- Google might use this as an argument to never license your content if they legally might be required to do so in the future

- Google might use this to cause long-term harm to websites who opted out of AI training early on if they happen to introduce AI features in the future that might be useful to driving traffic

Question: Will this impact my rankings in Google Search or Google Maps?

Answer: Google has answered this, but not exactly in a straight forward manner. While they do clearly state in one document “Google-Extended does not impact a site’s inclusion or ranking in Google Search.” and in another they say that Google-Extended does not impact Google’s AI Overviews and the links that appear in them, there is little testing or evidence to back this up. The reality is we don’t know exactly how Google uses the AI training data or the ability to use AI training data in the evolving AI-first SERPs. For example we know Google has multiple AI-derived ranking signals that use their training data such as “consensus” so this would then impact rankings if Google-Extended left your content out of being considered here. The documentation also clearly says “Google Search Results” and fails to mentions News, Maps, Images, Videos, Shopping, etc… so the impact in these areas is completely unknown. For now though, the answer from Mountain View is “No”, Google-Extended should not impact your search rankings or traffic at all – only the AI Overviews stealing that data from websites.

Here is what Google says about using Google-Extended and the impact on rankings in search:

“Google-Extended is a standalone product token that web publishers can use to manage whether their sites help improve Gemini Apps and Vertex AI generative APIs, including future generations of models that power those products. Grounding with Google Search on Vertex AI does not use web pages for grounding that have disallowed Google-Extended. Google-Extended does not impact a site’s inclusion or ranking in Google Search.”

Method #2 – Disallow Command in Robots.txt

If Method #1 is a hatchet, this method is a hammer or more appropriately a bulldozer or wrecking ball. Where in Method #1 you might accomplish your goal of removing your content from being used by Google for AI training (if they are actually doing that with this command) and possibly harming yourself from future benefits (if there ever are any) in Google’s AI systems, in this method you are 100% doing both while also losing 100% of your Google organic search traffic and if not done correctly search traffic from all other engines.

To use this tag simply add this line to your Robots.txt file under the first user-agent mention and/or under a user-agent mention specifically for Google.

User-agent: Googlebot

Disallow: / Pros:

- Your content can no longer be used to train Google’s AI systems.

- If your site / data is valuable enough this might convince Google to pay money to license it like they did Reddit and StackOverflow.

Cons:

- In a few hours to a few days all content on your site will be removed from Google search.

- This impacts 100% of content on your site.

- There is no way to allow some pages to be used for training but not others.

- This will remove your links from AI Overviews (no details from Google here).

- This might remove your data from any AI parts of Google’s ranking systems (no details from Google here).

- This might remove your local business from being mentioned in AI Overviews (no details from Google here).

- This does not remove your content from Google’s training that has already been used.

- Google might offer less to license your content than you made from the search traffic – if they do at all.

As you can see neither option is amazing since it might lead to even less traffic from Google in the short run. Unless you find yourself with a highly unique and highly valuable data set or user set, neither of these options offers much hope.

We have begun blocking Google’s AI training here on our site using the Google-Extended robots.txt command as a test and I recommend all bloggers and publishers harmed by recent updates or content theft by Google do the same until the incentives are realigned in a way that encourages participation.

References

“An update on web publisher controls” by Danielle Romain VP, Trust at Google published on Sep 28th, 2023: https://blog.google/technology/ai/an-update-on-web-publisher-controls/

“Google’s Common Crawlers” by Google for Developers last updated March 6th, 2025: https://developers.google.com/search/docs/crawling-indexing/google-common-crawlers

“AI Overviews and Your Site” by Google Search Central last updated on February 11th, 2025: https://developers.google.com/search/docs/appearance/ai-overviews

“Our AI Principals” by Google: https://ai.google/responsibility/principles/